Remember when ChatGPT first broke cover in late 2022 – the excitement, astonishment, puzzlement at what a mere machine could suddenly do? And then the attendant feelings of dread, anger, anxiety and denialism that struck teachers and academic administrations everywhere. This, they fumed, was a tool custom-built to enable cheating on a global scale.

In an academic world – especially the humanities – built on assessing students on the basis of written essays, how would we be able to assign grades when machine-written essays would be undetectable and in some cases much better than what the average student could produce on their own? Since this would be cheating, they concluded, the technology should be banned.

Thus did academia slam the stable door, apparently without troubling itself to reflect upon whether there might be alternative ways of grading student performance. Students, for their part, saw the technology as heaven-sent, and went for it like ostriches that had stumbled upon a hoard of brass doorknobs (as PG Wodehouse would have put it).

A survey carried out this year by the Higher Education Policy Institute thinktank found 92% of UK students are using AI in some form, up from 66% in 2024, and 88% have used tools such as ChatGPT for assessments, up from 53% in 2024. The main uses of the tools are explaining concepts, summarising articles and suggesting research ideas, but a significant proportion of students – 18% – have included AI-generated text directly in their work.

This kind of penetration has led the American technology blogger and author Nicholas Carr, an astute observer of these things, to conclude that “AI may be the most rapidly adopted educational tool since the pencil”.

Nevertheless, institutional resistance to the technology continues apace. If you’re ever looking for examples of dogged reluctance to change, universities would be a good place to start. In too many institutions, the discourse about AI is stuck in an unproductive doom loop. It’s time for a reset.

First off, universities need to accept that denialism isn’t a strategy. AI has arrived and has compelling potential for good as well as evil. In other words, it’s just a technology and obeys Melvin Kranzberg’s first law: that it “is neither good nor bad; nor is it neutral”. Second, equating AI use with “cheating” may make sense institutionally, for what are modern universities but credentialling institutions? But this doesn’t provide a convincing riposte to students, who are also customers of universities and are understandably desperate to obtain the right credentials.

A more convincing argument against using AI to write essays is that those who resort to it are, in fact, cheating themselves. “The pedagogical value of a writing assignment,” as Carr puts it, “doesn’t lie in the tangible product of the work – the paper that gets handed in at the assignment’s end. It lies in the work itself: the critical reading of source materials, the synthesis of evidence and ideas, the formulation of a thesis and an argument and the expression of thought in a coherent piece of writing.”

Or to put it differently, there are two kinds of writer: those who know what they think and write it, and those who find out what they think by trying to write it. Most of us belong in the latter category. Which implies that using an AI tool to do the writing means that little of that painful but vital learning process happens.

That doesn’t mean that tools such as large language models aren’t useful; they are. But they should be playing a different role in the educational process, as what Ethan Mollick, a professor at the University of Pennsylvania’s Wharton School, calls “co-intelligences”: machines that students use as partners in the intellectual work that leads to qualifications.

But for that to work, students need to know much more about the knowledge domains they are studying, so that they are in a position to evaluate what their machine partners come up with – not least because machines can at times make stuff up.

There’s already good evidence that to get the best out of AI tools, you need to have an adequate knowledge of the domain that you, and they, are exploring. Only then do the two of you add up to more than the sum of your parts.

It’s not rocket science. But it does require a change of mindset, and an acceptance that AI tools have a useful role to play in higher education, not as generators of suspect essays and theses, but as energetic assistants in processes of inquiry.

Whether universities are ready for such a realignment is another question. Maybe I should put it to ChatGPT.

What I’m reading

Thoreau road

Is It Possible to Read Walden When You Own a Smartphone? is a nice essay by Rebecca Baumgartner on trying to go back to Walden Pond.

Cannes laughter

Louis Pisano’s Substack post The Bezos Cannes-tastrophe recounts the farcical spectacle of Jeff Bezos and his fiancee miming environmentalism in Cannes.

Positive vibe

Vibe Coding for Domain Experts is an interesting essay by Derek Willis about using a large language model to help revise computer code.

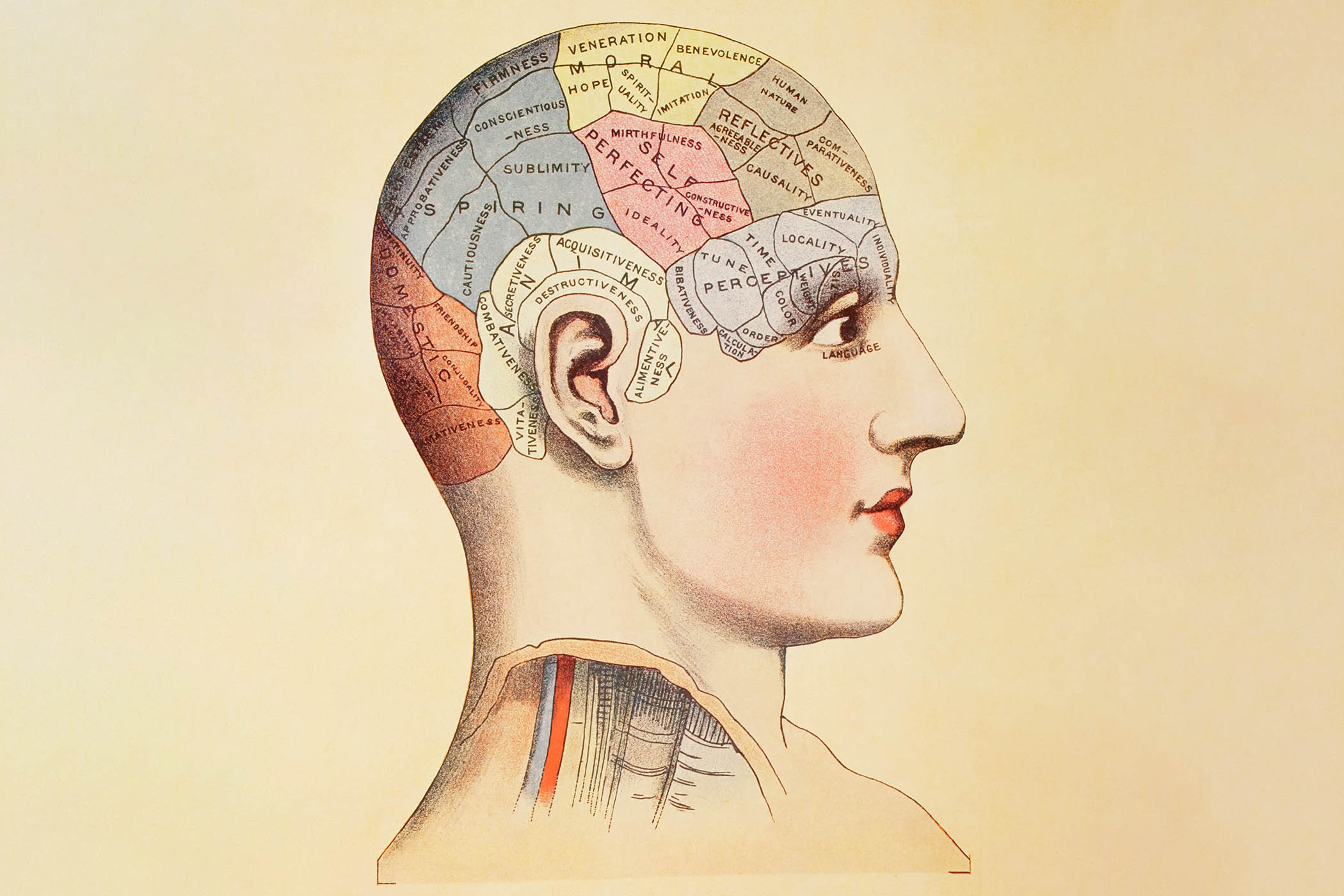

Photograph by Getty